Are you finding it extremely difficult to get the desired website rankings on Google, Bing, and other search engines? In a previous article, we discussed some basic SEO problems that can hurt your website rankings. If you have not read it already, then you may want to look at it first. Let us now discuss some advanced SEO problems that require expert handling.


The common SEO issues that hurt rankings

Issue 1: Page Not Found (404) Errors

A Page Not Found (404) error simply means that Google tried to access a page on your website, but the server reported that the page could not be found.

404 errors can crop up for various reasons. The page might have been deleted, the URL might have been changed without a proper redirect, or a server problem might be to blame.

Solution:

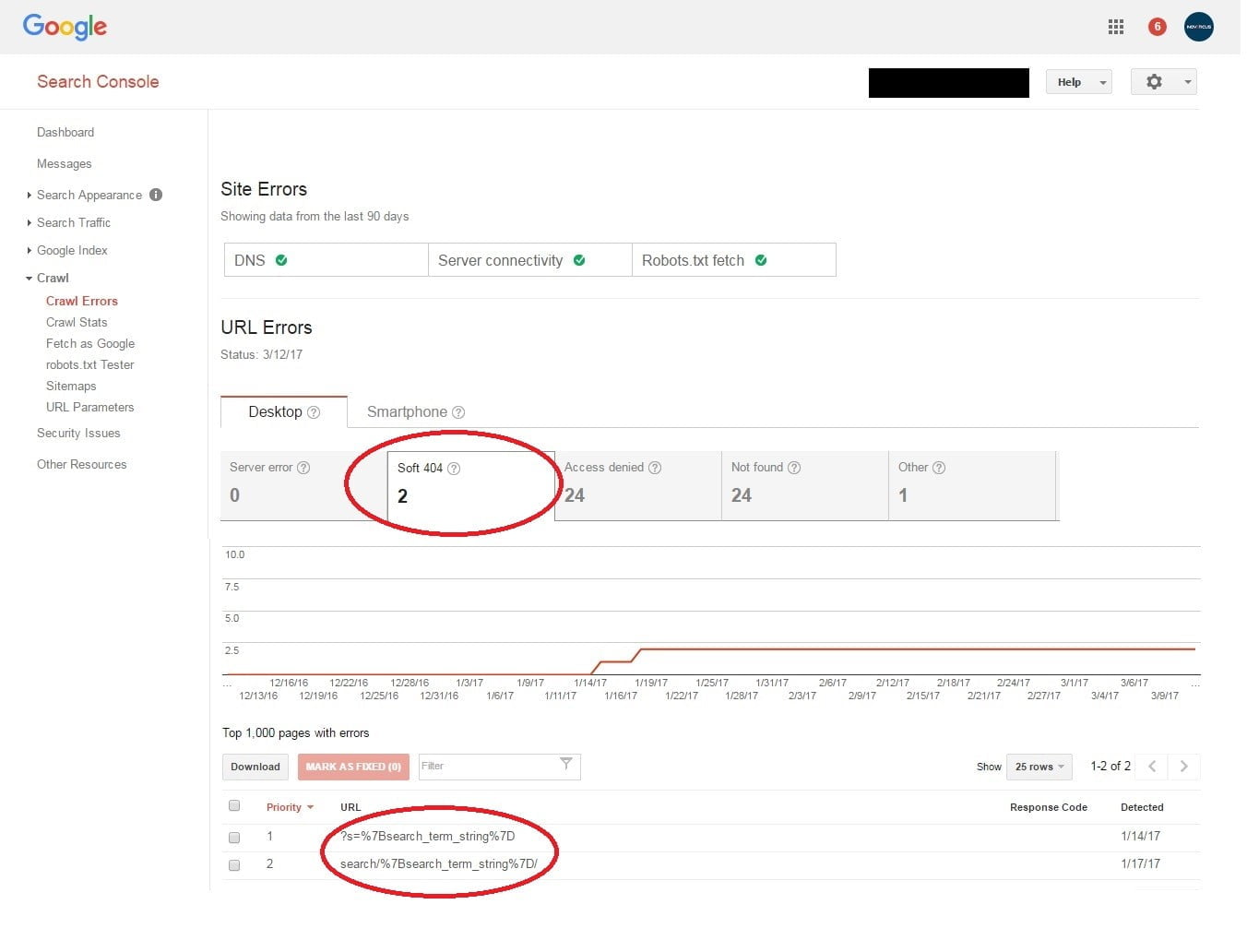

You can find the list of pages with 404 errors in Google Search Console. These errors are listed under Crawl > Crawl Errors. Google will show you all the pages that are inaccessible from a desktop as well as a smartphone, and will also give you the date on which each problem was detected. Analyse each of these errors and manually visit every page to see whether it still exists. If the page is no longer there, then put a permanent (301) redirect to the next best page or the homepage, so that the 404 errors go away.
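The manual check described above can be partly scripted. The following is a minimal sketch, not a replacement for the Search Console report: it asks each URL for its status code and then prints redirect lines for the pages that are gone. The function names are hypothetical, and the `Redirect 301` output assumes an Apache server with mod_alias enabled; other servers use a different syntax.

```python
from urllib.error import HTTPError, URLError
from urllib.request import Request, urlopen


def fetch_status(url, timeout=10):
    """Return the HTTP status code for url, or None if the server is unreachable."""
    request = Request(url, method="HEAD", headers={"User-Agent": "link-check-sketch"})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code  # 4xx and 5xx responses are raised as exceptions
    except (URLError, TimeoutError):
        return None  # DNS failure, refused connection, timeout, ...


def find_missing_pages(urls, get_status=fetch_status):
    """Return the URLs that answer with 404 (Not Found)."""
    return [url for url in urls if get_status(url) == 404]


def redirect_rules(redirects):
    """Build one 'Redirect 301' line per entry of {old_path: new_url}.

    Assumption: the lines are meant for an Apache .htaccess file.
    """
    return [f"Redirect 301 {old_path} {new_url}" for old_path, new_url in redirects.items()]
```

You would pass `find_missing_pages` the URLs from the crawl errors report, decide by hand where each missing page should point, and then paste the output of `redirect_rules` into your server configuration.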

Issue 2: Site Map Is Unavailable/Not Updated Regularly

A sitemap helps the search engine crawlers to understand your website better and discover all its pages. A missing or incomplete sitemap can result in many pages not being found, and hence not being indexed. Unless you publish a complete sitemap and update it regularly, your website rankings are likely to suffer.

Solution:

Keeping the website sitemap up to date improves the crawling efficiency of the search bots and helps them find all the pages on your website, especially the recently created or updated ones.

Unless you are using WordPress and an automated SEO plugin, you might have to create and update the XML sitemap of your website manually. If you're using WordPress, install the Yoast SEO or All In One SEO Pack plugin. Otherwise, you can visit the XML Site Map Generator website to create a sitemap and upload it to your server manually.
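If you maintain the sitemap by hand, a small script can regenerate it whenever pages change. The sketch below uses only the Python standard library and the public sitemaps.org namespace; the function name and the input format (a list of URL and last-modified pairs) are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified) pairs.

    last_modified is a YYYY-MM-DD string, or None to leave out <lastmod>.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if last_modified:
            ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Save the returned text as sitemap.xml in the root of your website and resubmit it to the search engines after each update.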

Thankfully, this is one of the advanced SEO problems that is relatively easy to fix, and it just requires you to be a little disciplined.

Issue 3: Your Website Is Not Mobile Friendly

In April 2015, Google updated their search algorithms to check for the mobile-friendliness of websites. Websites that are mobile-friendly are built on a responsive framework and render well on different screen sizes. This eliminates the need for the user to pinch and zoom in to read the contents. Websites that are not responsive have seen a drop in their rankings for providing a poor experience to their users.

Remember, this is one of those advanced SEO problems that you will need to fix right now!

Solution:

First, use the Mobile-Friendly Test by Google to check whether your site is responsive or not.

If your website is not compliant, then you will either have to redevelop your website on a responsive framework or create a separate mobile website, which I would not recommend for various reasons. You can also create Accelerated Mobile Pages (AMP) to make your website load faster on mobile devices.
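Before running the full test, you can do a very rough first check yourself. Responsive pages normally declare a viewport meta tag with `width=device-width`, so the sketch below simply looks for that tag in a page's HTML. This is only a heuristic under that assumption: a page can carry the tag and still render badly on a phone, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        is_viewport = (attributes.get("name") or "").lower() == "viewport"
        if tag == "meta" and is_viewport and self.viewport is None:
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """Return True if the page declares a viewport based on the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()
```

A page that fails this check is almost certainly not responsive; a page that passes still needs to go through the Mobile-Friendly Test.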

Issue 4: Your Site Features Duplicate Content

The issue of duplicate content on a website is more common than you would believe. When several pages on your website have different URLs but identical or very similar content, then Google will not know which one of these pages to rank higher. Therefore, the search rankings of all of these pages suffer, and the overall website rankings go down.

In many cases, the duplicate content problem happens because the same page gets shown under different URLs.

For example, say you have a blog post titled “How To Do SEO”.

The original URL of this article is http://xyz.com/how-to-do-seo.

This post is filed under the blog category named “SEO”, so it is also accessible through the URL: http://xyz.com/category/seo/how-to-do-seo.

Google will treat these as separate pages having the same content. As a result, none of the URLs will be ranked high by Google.

Solution:

Check whether your website has a duplicate content problem with a tool like Siteliner.
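If you already have the text of your pages at hand, you can also run a crude version of this check yourself. The sketch below groups URLs whose text is identical after ignoring case and whitespace. It is an illustration only: it will not catch pages that are merely very similar, and the function names and the URL-to-text input format are assumptions.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages):
    """pages maps URL -> page text. Return lists of URLs that share the same text."""
    by_fingerprint = defaultdict(list)
    for url, text in pages.items():
        by_fingerprint[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in by_fingerprint.values() if len(urls) > 1]
```

Every group that comes back is a set of URLs competing with each other for the same content.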

One of the common ways to deal with duplicate content is to use canonical links. Add a `rel="canonical"` link tag to the duplicate pages to tell Google which one of the URLs has to be indexed. This will ensure that no link juice is lost due to the problem of duplicate content.
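Once the canonical links are in place, it is worth verifying that every duplicate really points to the URL you want indexed. Here is a minimal sketch of such a check using Python's built-in HTML parser; the names are hypothetical and the pages are assumed to be available as a mapping from URL to HTML.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def canonical_url(html):
    """Return the canonical URL declared by the page, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


def pages_with_wrong_canonical(pages, preferred_url):
    """pages maps URL -> HTML. Return URLs whose canonical is missing or different."""
    return [url for url, html in pages.items() if canonical_url(html) != preferred_url]
```

An empty result means all the duplicates agree on the preferred URL.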

Issue 5: Web Page Not Canonicalized

Canonicalization refers to making your site accessible through either the www or the non-www version of the URL, and not both. It is also a great way to tell the search engines which one to pay attention to. For example, if your website can be accessed through both http://homepage.com and http://www.homepage.com, then it can confuse the search engines and cause a drop in the rankings.

Solution:

Ensure that your site has a permanent (301) redirect in the .htaccess file from the non-preferred URL to the preferred URL of your website. If you are also placing a `rel="canonical"` tag on a particular page, then the code has to be placed in the `<head>` section of the page.

Redirects can be of two types:

a. www to non-www

If you want to keep https://homepage.com, then install a redirect towards it from https://www.homepage.com. The tag format will be: `<link rel="canonical" href="https://homepage.com">`

b. non-www to www

Alternatively, if you want to keep https://www.homepage.com, then install a redirect towards it from https://homepage.com. The tag format will be: `<link rel="canonical" href="https://www.homepage.com">`
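The choice between the two versions can be expressed as a small helper that rewrites any URL to the preferred host and produces the matching canonical tag. This is a sketch only, built on the standard library; the function names are hypothetical, and it assumes the URL contains no username or password.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def to_preferred_host(url, prefer_www):
    """Rewrite url so its host is the www or the non-www version."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    bare = host[4:] if host.startswith("www.") else host
    preferred = f"www.{bare}" if prefer_www else bare
    netloc = preferred if parts.port is None else f"{preferred}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


def canonical_link_tag(url, prefer_www):
    """Return the <link> tag that belongs in the page's <head>."""
    href = escape(to_preferred_host(url, prefer_www), quote=True)
    return f'<link rel="canonical" href="{href}">'
```

For example, `to_preferred_host("https://www.homepage.com/about", prefer_www=False)` returns `https://homepage.com/about`.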

Issue 6: Incorrect Robots.txt

The robots.txt file is extremely important for every website because it has a direct impact on search engine optimization. It tells the search engine crawlers which pages they may crawl and which they should skip. If your robots.txt is poorly written, then URLs that you want to be crawled might get blocked, and those you want to hide from the crawlers might get crawled. This can cause serious problems for search engine optimization.

Solution:

Check the structure of your robots.txt with a tool. The easiest one to find is the robots.txt tester within Google Search Console. If any errors are detected, then correct them and upload the new robots.txt to your web server as soon as possible. It is good practice to regenerate the sitemap afterwards, submit it to the different search engines, and request a re-crawl.
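You can also test a draft robots.txt locally before uploading it. The sketch below uses Python's standard `urllib.robotparser` module to list which of your important URLs the draft would block. The function name is hypothetical, and Python's parser may interpret overlapping Allow and Disallow rules differently from Google, so treat the result as a first check rather than the final word.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given robots.txt text forbids for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

Feed it the pages you expect to rank. Any URL that comes back is one the crawlers would be told to skip.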

Get an expert to fix these advanced SEO problems

These are the advanced SEO problems that might have crept into your website and might be hurting its rankings in the search engines.

If your website is facing any of these advanced SEO problems, then you need to act now!

Consult an SEO expert or a digital marketing agency to help you correct these advanced SEO problems and ensure that your website ranking goes up steadily.

If you need our help, then the friendly team of Inovaticus Marketing Solutions can always be there at your service. Take a look at our Search Engine Optimization services, and contact us to find out how we can customize our services for your needs.

The work that we put into fixing these advanced SEO problems will be well worth it, since your website will have a higher chance of moving up in the Search Engine Result Pages.
